

Experimental Design – Types, Methods, Guide


Experimental design is a structured approach used to conduct scientific experiments. It enables researchers to explore cause-and-effect relationships by controlling variables and testing hypotheses. This guide explores the types of experimental designs, common methods, and best practices for planning and conducting experiments.

Experimental Design

Experimental design refers to the process of planning a study to test a hypothesis, where variables are manipulated to observe their effects on outcomes. By carefully controlling conditions, researchers can determine whether specific factors cause changes in a dependent variable.

Key Characteristics of Experimental Design :

- Manipulation of Variables : The researcher intentionally changes one or more independent variables.

- Control of Extraneous Factors : Variables other than the independent variable are kept constant so that they cannot influence the results.

- Randomization : Subjects are often randomly assigned to groups to reduce bias.

- Replication : Repeating the experiment or having multiple subjects helps verify results.

Purpose of Experimental Design

The primary purpose of experimental design is to establish causal relationships by controlling for extraneous factors and reducing bias. Experimental designs help:

- Test Hypotheses : Determine if there is a significant effect of independent variables on dependent variables.

- Control Confounding Variables : Minimize the impact of variables that could distort results.

- Generate Reproducible Results : Provide a structured approach that allows other researchers to replicate findings.

Types of Experimental Designs

Experimental designs can vary based on the number of variables, the assignment of participants, and the purpose of the experiment. Here are some common types:

1. Pre-Experimental Designs

These designs are exploratory and lack random assignment, often used when strict control is not feasible. They provide initial insights but are less rigorous in establishing causality.

- Example (one-shot case study) : A training program is provided, and participants' knowledge is tested afterward, with no pretest and no comparison group.

- Example (one-group pretest-posttest design) : A group is tested on reading skills, receives instruction, and is tested again to measure improvement.

2. True Experimental Designs

True experiments involve random assignment of participants to control or experimental groups, providing high levels of control over variables.

- Example : A new drug’s efficacy is tested with patients randomly assigned to receive the drug or a placebo.

- Example : Participants are randomly assigned to two groups; one group receives a treatment, the other receives no intervention, and both groups are measured afterward.

3. Quasi-Experimental Designs

Quasi-experiments lack random assignment but still aim to determine causality by comparing groups or time periods. They are often used when randomization isn’t possible, such as in natural or field experiments.

- Example : Schools receive different curriculums, and students’ test scores are compared before and after implementation.

- Example : Traffic accident rates are recorded for a city before and after a new speed limit is enforced.

4. Factorial Designs

Factorial designs test the effects of multiple independent variables simultaneously. This design is useful for studying the interactions between variables.

- Example : Studying how caffeine (variable 1) and sleep deprivation (variable 2) affect memory performance.

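- Example : A study of how age, gender, and education level jointly relate to technology usage. Because these are participant characteristics that cannot be manipulated, such a design is quasi-experimental rather than a true factorial experiment.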
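To make the idea of an interaction concrete, the sketch below treats the caffeine and sleep-deprivation example as a hypothetical 2x2 design and computes the two main effects and the interaction contrast from cell means. The memory scores and the function name `effects_2x2` are invented for illustration; in a real study these effects would be tested with a factorial ANOVA rather than read off raw means.

```python
from statistics import mean

# Hypothetical mean memory scores for each cell of a 2x2 design.
# Key: (caffeine, sleep_deprived); values are invented for illustration.
cell_means = {
    (False, False): 20.0,  # no caffeine, rested
    (False, True): 14.0,   # no caffeine, sleep-deprived
    (True, False): 21.0,   # caffeine, rested
    (True, True): 19.0,    # caffeine, sleep-deprived
}


def effects_2x2(means):
    """Return (caffeine main effect, deprivation main effect, interaction)."""
    # Effect of caffeine within each sleep condition
    caffeine_when_rested = means[(True, False)] - means[(False, False)]
    caffeine_when_deprived = means[(True, True)] - means[(False, True)]
    # Effect of sleep deprivation within each caffeine condition
    deprivation_no_caffeine = means[(False, True)] - means[(False, False)]
    deprivation_with_caffeine = means[(True, True)] - means[(True, False)]

    caffeine_main = mean([caffeine_when_rested, caffeine_when_deprived])
    deprivation_main = mean([deprivation_no_caffeine, deprivation_with_caffeine])
    # Interaction: how much the caffeine effect changes with sleep condition
    interaction = caffeine_when_deprived - caffeine_when_rested
    return caffeine_main, deprivation_main, interaction


print(effects_2x2(cell_means))  # (3.0, -4.0, 4.0)
```

A non-zero interaction in these made-up numbers would mean caffeine helps sleep-deprived participants more than rested ones, which is the kind of pattern a one-variable-at-a-time experiment cannot reveal.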

5. Repeated Measures Design

In repeated measures designs, the same participants are exposed to different conditions or treatments. This design is valuable for studying changes within subjects over time.

- Example : Measuring reaction time in participants before, during, and after caffeine consumption.

- Example : Testing two medications, with each participant receiving both and the order of the medications varied across participants (a crossover design).

Methods for Implementing Experimental Designs

Random Assignment

- Purpose : Ensures each participant has an equal chance of being assigned to any group, reducing selection bias.

- Method : Use random number generators or assignment software to allocate participants randomly.

Blinding

- Purpose : Prevents participants or researchers from knowing which group (experimental or control) participants belong to, reducing bias.

- Method : Implement single-blind (participants unaware) or double-blind (both participants and researchers unaware) procedures.

Control Groups

- Purpose : Provides a baseline for comparison, showing what would happen without the intervention.

- Method : Include a group that does not receive the treatment but otherwise undergoes the same conditions.

Counterbalancing

- Purpose : Controls for order effects in repeated measures designs by varying the order of treatments.

- Method : Assign different sequences to participants, ensuring that each condition appears equally often in each position of the sequence.

Replication

- Purpose : Improves reliability by repeating the experiment or including multiple participants within groups.

- Method : Increase sample size or repeat studies with different samples or in different settings.
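As an illustration of random assignment, here is a minimal Python sketch that shuffles a participant list and deals participants into groups in turn, so group sizes differ by at most one. The function name `randomly_assign` and the optional `seed` parameter are illustrative assumptions; recording the seed lets the allocation be reproduced and audited later.

```python
import random


def randomly_assign(participants, groups, seed=None):
    """Randomly allocate participants to groups of (nearly) equal size."""
    rng = random.Random(seed)  # separate generator; a fixed seed makes it reproducible
    shuffled = list(participants)
    rng.shuffle(shuffled)
    allocation = {group: [] for group in groups}
    # Deal the shuffled participants round-robin, like cards
    for i, person in enumerate(shuffled):
        allocation[groups[i % len(groups)]].append(person)
    return allocation


participants = [f"P{n:02d}" for n in range(1, 11)]
print(randomly_assign(participants, ["treatment", "control"], seed=42))
```

Because every ordering of the shuffled list is equally likely, each participant has the same chance of ending up in any group, which is the property the Purpose line for random assignment describes.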

Steps to Conduct an Experimental Design

1. Define the Hypothesis : Clearly state what you intend to discover or test through the experiment. A strong, testable hypothesis guides the experiment's design and variable selection.

2. Identify the Variables :

- Independent Variable (IV) : The factor manipulated by the researcher (e.g., amount of sleep).

- Dependent Variable (DV) : The outcome measured (e.g., reaction time).

- Control Variables : Factors kept constant to prevent interference with results (e.g., time of day for testing).

3. Choose the Design : Choose a design type that aligns with your research question, hypothesis, and available resources. For example, a randomized controlled trial (RCT) for a medical study or a factorial design for complex interactions.

4. Assign Participants : Randomly assign participants to experimental or control groups. Ensure control groups are similar to experimental groups in all respects except for the treatment received.

5. Apply Blinding : Where possible, use single-blind or double-blind procedures so that participants' and researchers' expectations do not bias the results.

6. Collect Data : Follow a consistent procedure for each group, collecting data systematically. Record observations and manage any unexpected events or variables that may arise.

7. Analyze the Data : Use statistical methods appropriate to the design to test for significant differences between groups, such as t-tests, ANOVA, or regression analysis.

8. Interpret the Results : Determine whether the results support your hypothesis and analyze any trends, patterns, or unexpected findings. Discuss possible limitations and implications of your results.
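For the analysis step, the simplest case is comparing one experimental and one control group on a single outcome. The sketch below computes Welch's t statistic (a t-test variant that does not assume equal group variances) and its approximate degrees of freedom using only Python's standard library; the reaction-time numbers are hypothetical. Converting t into a p-value requires the t distribution, which a statistics package such as SciPy provides through `scipy.stats.ttest_ind(a, b, equal_var=False)`.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    n_a, n_b = len(group_a), len(group_b)
    # Squared standard error of each group mean (sample variance / n)
    v_a = variance(group_a) / n_a
    v_b = variance(group_b) / n_b
    t = (mean(group_a) - mean(group_b)) / sqrt(v_a + v_b)
    df = (v_a + v_b) ** 2 / (v_a ** 2 / (n_a - 1) + v_b ** 2 / (n_b - 1))
    return t, df


# Hypothetical reaction times (ms) for a rested and a sleep-deprived group
rested = [250, 262, 245, 258, 249, 255]
deprived = [281, 270, 292, 276, 288, 279]
t, df = welch_t(rested, deprived)
print(f"t = {t:.2f}, df = {df:.1f}")
```

Welch's version is used here because it stays valid when the two groups have different spreads, at the cost of fractional degrees of freedom.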

Examples of Experimental Design in Research

- Medicine : Testing a new drug’s effectiveness through a randomized controlled trial, where one group receives the drug and another receives a placebo.

- Psychology : Studying the effect of sleep deprivation on memory using a within-subject design, where the same participants are tested under each sleep condition.

- Education : Comparing teaching methods in a quasi-experimental design by measuring students’ performance before and after implementing a new curriculum.

- Marketing : Using a factorial design to examine the effects of advertisement type and frequency on consumer purchase behavior.

- Environmental Science : Testing the impact of a pollution reduction policy through a time series design, recording pollution levels before and after implementation.

Experimental design is fundamental to conducting rigorous and reliable research, offering a systematic approach to exploring causal relationships. With various types of designs and methods, researchers can choose the most appropriate setup to answer their research questions effectively. By applying best practices, controlling variables, and selecting suitable statistical methods, experimental design supports meaningful insights across scientific, medical, and social research fields.

References

- Campbell, D. T., & Stanley, J. C. (1963). Experimental and Quasi-Experimental Designs for Research. Houghton Mifflin.

- Shadish, W. R., Cook, T. D., & Campbell, D. T. (2002). Experimental and Quasi-Experimental Designs for Generalized Causal Inference. Houghton Mifflin.

- Fisher, R. A. (1935). The Design of Experiments. Oliver and Boyd.

- Field, A. (2013). Discovering Statistics Using IBM SPSS Statistics. Sage Publications.

- Cohen, J. (1988). Statistical Power Analysis for the Behavioral Sciences. Routledge.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


Experimental Design: Types, Examples & Methods

Saul McLeod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.


Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


Experimental design refers to how participants are allocated to different groups in an experiment. Types of design include repeated measures, independent groups, and matched pairs designs.

Probably the most common way to design an experiment in psychology is to divide the participants into two groups, the experimental group and the control group, and then introduce a change to the experimental group, not the control group.

The researcher must decide how to allocate the sample to the different experimental groups. For example, if there are 10 participants, will all 10 take part in both conditions (i.e., repeated measures), or will the participants be split in half, with each taking part in only one condition?

Three types of experimental designs are commonly used:

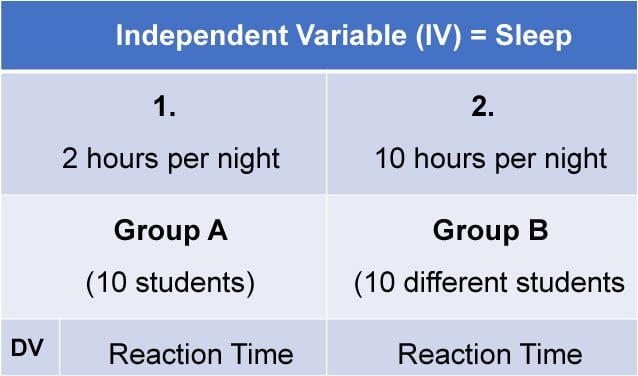

1. Independent Measures

Independent measures design, also known as between-groups, is an experimental design where different participants are used in each condition of the independent variable. This means that each condition of the experiment includes a different group of participants.

This should be done by random allocation, ensuring that each participant has an equal chance of being assigned to each group.

Independent measures involve using separate groups of participants, one for each condition. The design has the following strengths and weaknesses:

- Con : More people are needed than with the repeated measures design (i.e., more time-consuming).

- Pro : Avoids order effects (such as practice or fatigue) as people participate in one condition only. If a person is involved in several conditions, they may become bored, tired, and fed up by the time they come to the second condition or become wise to the requirements of the experiment!

- Con : Differences between participants in the groups may affect results, for example, variations in age, gender, or social background. These differences are known as participant variables (i.e., a type of extraneous variable).

- Control : After the participants have been recruited, they should be randomly assigned to their groups. This should ensure the groups are similar, on average (reducing participant variables).

2. Repeated Measures Design

Repeated Measures design is an experimental design where the same participants participate in each independent variable condition. This means that each experiment condition includes the same group of participants.

Repeated Measures design is also known as within-groups or within-subjects design.

- Pro : As the same participants are used in each condition, participant variables (i.e., individual differences) are reduced.

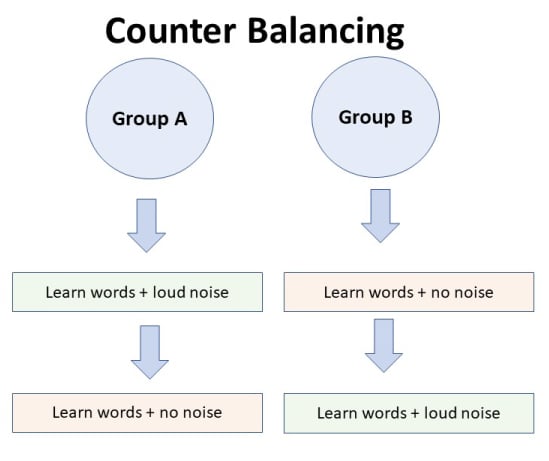

- Con : There may be order effects. Order effects refer to the order of the conditions affecting the participants’ behavior. Performance in the second condition may be better because the participants know what to do (i.e., practice effect). Or their performance might be worse in the second condition because they are tired (i.e., fatigue effect). This limitation can be controlled using counterbalancing.

- Pro : Fewer people are needed as they participate in all conditions (i.e., saves time).

- Control : To combat order effects, the researcher counterbalances the order of the conditions for the participants, that is, alternates the order in which participants perform the different conditions of the experiment.
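Because each participant contributes a score in every condition, repeated measures data are usually analysed on the within-person differences, which is how the design removes individual differences from the comparison. A minimal sketch of the paired t statistic follows; the recall scores are hypothetical, and SciPy's `scipy.stats.ttest_rel` would return the same statistic together with a p-value.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(condition_1, condition_2):
    """Paired t statistic and degrees of freedom for repeated measures data.

    Position i in each list must be the same participant's score.
    """
    if len(condition_1) != len(condition_2):
        raise ValueError("every participant needs a score in both conditions")
    diffs = [a - b for a, b in zip(condition_1, condition_2)]
    n = len(diffs)
    # Mean within-person difference divided by its standard error
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


# Hypothetical words recalled by the same four participants in each condition
no_noise = [10, 12, 9, 11]
loud_noise = [8, 11, 8, 8]
print(paired_t(no_noise, loud_noise))
```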

Counterbalancing

Suppose we used a repeated measures design in which all of the participants first learned words in “loud noise” and then learned them in “no noise.”

We would expect the participants to perform better in “no noise” partly because of order effects, such as practice, rather than because of the noise manipulation alone. However, a researcher can control for order effects using counterbalancing.

The sample would be split into two groups, and the two conditions labelled A (“loud noise”) and B (“no noise”). Group 1 does A then B, and group 2 does B then A. This is to balance out order effects across the two conditions.

Although order effects occur for each participant, they balance each other out in the results because they occur equally in both groups.
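The A-then-B / B-then-A scheme generalises to more conditions with a Latin square, in which every condition appears once in each serial position. The Python sketch below builds a simple cyclic Latin square and hands the orders out in rotation after a random shuffle; the function names are hypothetical. With two conditions it reproduces the group 1 / group 2 split. A cyclic square balances position but not which condition immediately follows which; more elaborate schemes, such as balanced Latin squares, address that.

```python
import random


def latin_square_orders(conditions):
    """Cyclic Latin square: row r starts at condition r and wraps around."""
    k = len(conditions)
    return [[conditions[(row + pos) % k] for pos in range(k)] for row in range(k)]


def counterbalance(participants, conditions, seed=None):
    """Shuffle participants, then give them the Latin-square orders in turn."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    orders = latin_square_orders(conditions)
    return {person: orders[i % len(orders)] for i, person in enumerate(shuffled)}


print(latin_square_orders(["loud noise", "no noise"]))
# [['loud noise', 'no noise'], ['no noise', 'loud noise']]
```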

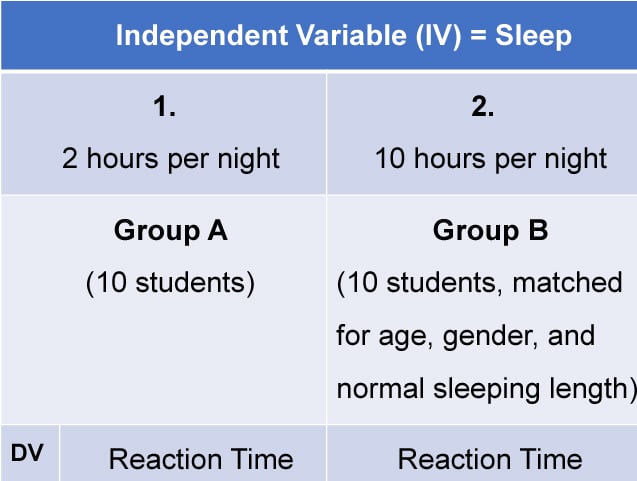

3. Matched Pairs Design

A matched pairs design is an experimental design where pairs of participants are matched in terms of key variables, such as age or socioeconomic status. One member of each pair is then placed into the experimental group and the other member into the control group.

One member of each matched pair must be randomly assigned to the experimental group and the other to the control group.

- Con : If one participant drops out, you lose the data from both members of the pair.

- Pro : Reduces participant variables because the researcher has tried to pair up the participants so that each condition has people with similar abilities and characteristics.

- Con : Very time-consuming trying to find closely matched pairs.

- Pro : It avoids order effects, so counterbalancing is not necessary.

- Con : Impossible to match people exactly unless they are identical twins!

- Control : Members of each pair should be randomly assigned to conditions. However, this does not solve all these problems.
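The matching-then-randomizing procedure can be sketched in a few lines of Python: rank participants on the matching variable (for instance a pretest score, like the depression-severity scores in the first Learning Check item below), pair neighbours, and flip a coin within each pair. The function name and data are hypothetical, and real studies often match on several variables at once, which this sketch does not attempt.

```python
import random


def matched_pairs(scores, seed=None):
    """Pair participants with adjacent scores, then randomize within pairs.

    scores maps participant id -> value of the matching variable.
    With an odd number of participants, the highest-ranked one is left unpaired.
    """
    rng = random.Random(seed)
    ranked = sorted(scores, key=scores.get)  # lowest to highest score
    experimental, control = [], []
    for i in range(0, len(ranked) - 1, 2):
        pair = [ranked[i], ranked[i + 1]]
        rng.shuffle(pair)  # random coin flip within the pair
        experimental.append(pair[0])
        control.append(pair[1])
    return experimental, control


severity = {"P1": 31, "P2": 18, "P3": 30, "P4": 17, "P5": 24, "P6": 25}
print(matched_pairs(severity, seed=1))
```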

Experimental design refers to how participants are allocated to an experiment’s different conditions (or IV levels). There are three types:

1. Independent measures / between-groups : Different participants are used in each condition of the independent variable.

2. Repeated measures / within-groups : The same participants take part in each condition of the independent variable.

3. Matched pairs : Each condition uses different participants, but they are matched in terms of important characteristics, e.g., gender, age, intelligence, etc.

Learning Check

Read about each of the experiments below. For each experiment, identify (1) which experimental design was used; and (2) why the researcher might have used that design.

1 . To compare the effectiveness of two different types of therapy for depression, depressed patients were assigned to receive either cognitive therapy or behavior therapy for a 12-week period.

The researchers attempted to ensure that the patients in the two groups had similar severity of depressed symptoms by administering a standardized test of depression to each participant, then pairing them according to the severity of their symptoms.

2 . To assess the difference in reading comprehension between 7- and 9-year-olds, a researcher recruited each group from a local primary school. They were given the same passage of text to read and then asked a series of questions to assess their understanding.

3 . To assess the effectiveness of two different ways of teaching reading, a group of 5-year-olds was recruited from a primary school. Their level of reading ability was assessed, and then they were taught using scheme one for 20 weeks.

At the end of this period, their reading was reassessed, and a reading improvement score was calculated. They were then taught using scheme two for a further 20 weeks, and another reading improvement score for this period was calculated. The reading improvement scores for each child were then compared.

4 . To assess the effect of organization on recall, a researcher randomly assigned student volunteers to two conditions.

Condition one attempted to recall a list of words that were organized into meaningful categories; condition two attempted to recall the same words, randomly grouped on the page.

Experiment Terminology

Ecological validity

The degree to which an investigation represents real-life experiences.

Experimenter effects

These are the ways that the experimenter can accidentally influence the participant through their appearance or behavior.

Demand characteristics

The clues in an experiment that lead the participants to think they know what the researcher is looking for (e.g., the experimenter's body language).

Independent variable (IV)

The variable the experimenter manipulates (i.e., changes), which is assumed to have a direct effect on the dependent variable.

Dependent variable (DV)

The variable the experimenter measures. This is the outcome (i.e., the result) of a study.

Extraneous variables (EV)

All variables which are not independent variables but could affect the results (DV) of the experiment. Extraneous variables should be controlled where possible.

Confounding variables

Variable(s) that have affected the results (DV), apart from the IV. A confounding variable could be an extraneous variable that has not been controlled.

Random Allocation

Randomly allocating participants to independent variable conditions means that all participants should have an equal chance of taking part in each condition.

The principle of random allocation is to avoid bias in how the experiment is carried out and limit the effects of participant variables.

Order effects

Changes in participants’ performance due to their repeating the same or similar test more than once. Examples of order effects include:

(i) practice effect: an improvement in performance on a task due to repetition, for example, because of familiarity with the task;

(ii) fatigue effect: a decrease in performance of a task due to repetition, for example, because of boredom or tiredness.


Experimental Design

What Is Experimental Design?

Experimental design is a structured process used to plan and conduct experiments. By carefully controlling and manipulating variables, researchers can obtain valid and reliable results that test hypotheses and determine cause-and-effect relationships.

The Basic Idea

No matter how much time, effort, and resources you put in, a poorly designed experiment is likely to yield unreliable and invalid results. Arguably, the most important part of conducting an experiment is the design and planning stage; when this is done correctly, you can be more confident that your results can be trusted.

Experimental design is the cornerstone of rigorous scientific inquiry, providing a structured and objective framework for systematically investigating phenomena, testing hypotheses, and discovering cause-and-effect relationships.

The main objective of experimental design is to establish the effect that an independent variable has on a dependent variable. [1] What does this mean, exactly?

Say, for instance, you're trying to understand how the amount of sleep someone gets at night affects their reaction times. In this scenario, the independent variable is the number of hours of sleep, while the dependent variable is reaction time—as it depends on changes in sleep. In the experiment, the independent variable (sleep) is controlled and adjusted to observe its effect on the dependent variable (reaction time). When experimental design is applied correctly, the researcher can be more confident about the causal relationship between sleep duration and reaction time.

As a general rule of thumb, setting up an experimental design includes the following four stages:

- Hypothesis: Establish a “testable idea” that an experiment can either support or refute.

- Treatment levels and variables: Define the independent variable to be manipulated, the dependent variable to be measured, and any extraneous conditions (also called nuisance variables ) that need to be controlled.

- Sampling: Specify the number of experimental units (in most behavioral studies, simply the participants) that are needed, including the population from which they will be sampled. To detect a causal effect of the independent variable on the dependent variable, the sample needs to be large enough to give the study adequate statistical power.

- Randomization: Decide how the experimental units will be randomly assigned to the different treatment groups—which usually receive varying “levels” of the independent variable, or perhaps none at all (this is called a control group ).
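To illustrate what “large enough” means at the sampling stage, a common planning approximation for two groups compared on a continuous outcome is `n_per_group = 2 * ((z_alpha + z_beta) / d) ** 2`, where `d` is the expected standardized effect size (Cohen's d), `z_alpha` is the two-sided critical value, and `z_beta` is the normal quantile for the desired power. The Python sketch below implements that approximation with the standard library; the function name is hypothetical, and dedicated power software using the t distribution gives slightly larger numbers, so treat the result as a planning estimate.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate sample size per group for a two-sided, two-group comparison.

    effect_size is the standardized mean difference (Cohen's d).
    Uses the normal approximation, so it slightly underestimates the exact t-test value.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the two-sided test
    z_beta = z.inv_cdf(power)           # quantile for the desired power
    return ceil(2 * ((z_alpha + z_beta) / effect_size) ** 2)


print(n_per_group(0.5))  # 63
```

Because the required size scales with 1/d², halving the expected effect roughly quadruples the sample needed, which is why small effects call for large studies.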

So, what distinguishes experiments from other forms of research? Of the four stages described above, the manipulation of independent variables and the random assignment of participants to different treatment groups are what truly set an experimental design apart from other approaches. [2] Meanwhile, creating a hypothesis and choosing which population to study are common processes across a range of research methodologies.

There are several different types of experimental design, depending on the circumstances and the phenomena being explored. To better understand each one, let’s refer back to our above example of testing the impact that sleep has on reaction time.

- An independent measures design (also known as between-groups) randomly assigns participants to several groups, each receiving a different condition. For example, one group might get 4 hours of sleep per night, another group might get 6 hours of sleep per night, and a third group might get 8 hours of sleep per night. The researchers would then measure each group's reaction time to assess how different amounts of sleep impact response speed.

- Meanwhile, in a repeated measures design, the same participants would experience all of the conditions. For example, they might get 4 hours of sleep, then 6 hours, and finally 8 hours (in different phases of the experiment), ideally with the order counterbalanced across participants to control for order effects. Their reaction time would be measured after each condition to see how their performance varies depending on the amount of sleep they received.

- Finally, a matched pairs design creates pairs of participants according to key variables such as their age, gender, or socioeconomic status. For our example study, one member of each pair, chosen at random, would get 6 hours of sleep, while the other would get 8 hours. Their reaction times would then be compared to see how sleep duration affects response speed.

Each experimental design comes with its own unique set of pros and cons. It’s up to the researcher to decide which one is best depending on the objectives of the study and the number of factors that need to be investigated.

Experimental observations are only experience carefully planned in advance, and designed to form a secure basis of new knowledge. – Sir Ronald Aylmer Fisher in The Design of Experiments (1935)

About the author

Dr. Lauren Braithwaite

Dr. Lauren Braithwaite is a Social and Behaviour Change Design and Partnerships consultant working in the international development sector. Lauren has worked with education programmes in Afghanistan, Australia, Mexico, and Rwanda, and from 2017–2019 she was Artistic Director of the Afghan Women’s Orchestra. Lauren earned her PhD in Education and MSc in Musicology from the University of Oxford, and her BA in Music from the University of Cambridge. When she’s not putting pen to paper, Lauren enjoys running marathons and spending time with her two dogs.


Example: Quasi-experimental design. You study whether gender identity affects neural responses to infant cries. Your independent variable is a subject variable, namely the gender identity of the participants. You have three groups: men, women and other. Your dependent variable is the brain activity response to hearing infant cries.


Guide to Experimental Design | Overview, 5 steps & Examples. Published on December 3, 2019 by Rebecca Bevans. Revised on June 21, 2023. Experiments are used to study causal relationships. You manipulate one or more independent variables and measure their effect on one or more dependent variables. Experimental design creates a set of procedures to systematically test a hypothesis.

A covariance design (also called a concomitant variable design) is a special type of pretest-posttest control group design where the pretest measure is essentially a measurement of the covariates of interest rather than that of the dependent variables. The design notation is shown in Figure 10.3, where C represents the covariates.


In experimental design, a dependent variable is a responding variable that changes based upon input values from an independent variable. Some researchers call a dependent variable an "outcome variable" or "response variable" because the value of the dependent variable is intrinsically linked to upstream changes in the independent variable.

Experimental Design | Types, Definition & Examples. Published on June 9, 2024 by Julia Merkus, MA. Revised on December 4, 2024. An experimental design is a systematic plan for conducting an experiment that aims to test a hypothesis or answer a research question. It involves manipulating one or more independent variables (IVs) and measuring their effect on one or more dependent variables (DVs ...

Experimental design is the process of carrying out research in an objective and controlled fashion so that precision is maximized and specific conclusions can be drawn regarding a hypothesis statement. Generally, the purpose is to establish the effect that a factor or independent variable has on a dependent variable.